Reconciliation: From Boring Necessity to Key Business Function

By Keith Whelan, Managing Director, EMEA

Reconciliations have always been viewed as an unavoidable obligation of running an operations department. Originally they were implemented purely to exercise and evidence control. The world has changed, however: financial instruments have become more complex, regulation more onerous, and the amount of data firms need to manage is growing exponentially.

In response, forward-thinking firms are now leveraging reconciliation processes to drive real business value, such as deriving analytics, reducing inefficiencies and optimising risk management.

The growing importance of reconciliation

Twenty years ago, reconciliations were mainly used to match simple records, such as cash transactions with a small number of fields and relatively simple data. Over time, their use expanded to provide critical support for new revenue-generating opportunities.

This was particularly apparent with the proliferation of new products dreamt up and traded in the OTC space in the early 2000s, where new strategies were shoehorned into systems that weren’t sophisticated enough to cope.

As a consequence, multiple front-end systems for different asset classes were used to capture the various legs of the trade. Reconciliations became a key control in obtaining approval from the new business departments set up to mitigate the risks the new products were likely to introduce. Typically, the reconciliations were built in Microsoft Excel, as building them with in-house tools would have taken several months.

Additional complexity and volume

Use cases for reconciliations have continued to grow up to the present day, although the focus has shifted from managing new business opportunities to regulatory compliance and cost control.

Under MiFID II, reconciliation is a mandatory requirement for transaction reporting. This is all well and good, until you consider the quantity and type of additional data required to report correctly. For example, highly sensitive data – such as dates of birth and passport numbers – is needed to identify the decision makers and executors of a trade.

This data is usually stored in HR databases and not in firms’ trade capture systems. Consequently, the output from the front-end system needs to be enriched with information from additional sources, and that’s before taking into account the smorgasbord of LEIs, ISINs, MICs and CFIs required for each report.

All this data needs to be normalised and reconciled somehow, which is a complex and difficult process to achieve without expert help. For more detail on this, see our guide to MiFID II data reconciliation.
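As a rough illustration of the enrich, normalise and reconcile steps described above, here is a minimal Python sketch. The field names, sample records and helper functions (`enrich_and_normalise`, `reconcile`) are all hypothetical and chosen for illustration; this is not a MiFID II schema, nor how any particular product works.

```python
from datetime import date

# Hypothetical sample data: trades from a front-end system, personal data
# from an HR source, and the rows that were actually reported.
trades = [
    {"trade_id": "T1", "isin": "gb0002634946", "trader_id": "E42"},
    {"trade_id": "T2", "isin": "US0378331005 ", "trader_id": "E77"},
]
hr_records = {
    "E42": {"date_of_birth": date(1980, 5, 17)},
    "E77": {"date_of_birth": date(1975, 11, 2)},
}
reported = [
    {"trade_id": "T1", "isin": "GB0002634946", "decision_maker_dob": "1980-05-17"},
    {"trade_id": "T2", "isin": "US0378331005", "decision_maker_dob": "1975-02-11"},
]


def enrich_and_normalise(trade, hr):
    """Add the HR-sourced date of birth and put fields into one format."""
    person = hr.get(trade["trader_id"])
    return {
        "trade_id": trade["trade_id"],
        "isin": trade["isin"].strip().upper(),  # trim whitespace, upper-case
        "decision_maker_dob": person["date_of_birth"].isoformat() if person else None,
    }


def reconcile(expected_rows, reported_rows, key="trade_id"):
    """Return (key, field, expected, reported) for every difference found."""
    reported_by_key = {row[key]: row for row in reported_rows}
    breaks = []
    for expected in expected_rows:
        actual = reported_by_key.get(expected[key])
        if actual is None:
            breaks.append((expected[key], "missing from report", None, None))
            continue
        for field, value in expected.items():
            if actual.get(field) != value:
                breaks.append((expected[key], field, value, actual.get(field)))
    return breaks


expected = [enrich_and_normalise(trade, hr_records) for trade in trades]
breaks = reconcile(expected, reported)
for item in breaks:
    print(item)
```

In this sample, the ISIN formatting differences disappear once the data is normalised, and the only break left is the transposed day and month in the date of birth for trade T2.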

Manual processes fill the gap

Financial institutions face a squeeze: not only do they have more complex reconciliations to run, they also need them live quickly. Speed to deploy has always been critical as it ensures businesses can exercise control and scale more easily. However, this has taken on further importance now that regulators are fining firms that fail to meet mandatory deadlines.

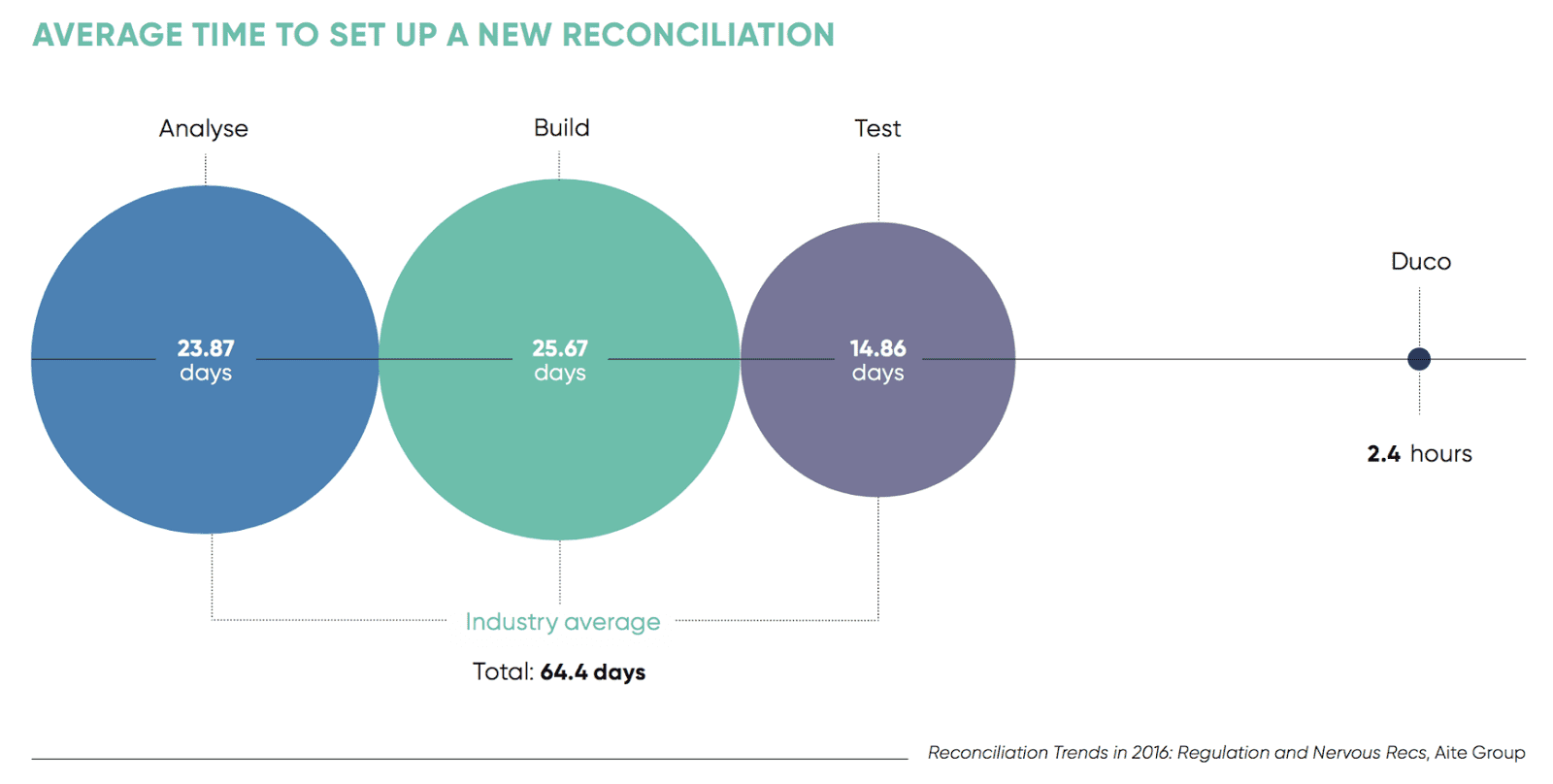

Traditional solutions have not kept up with the pace of change particularly well. Aite Group’s Reconciliation Trends in 2016 whitepaper, published last year, stated that it takes on average more than 64 days to set up a single reconciliation (for comparison, at Duco our average is 2.4 hours).

Because this process is generally so slow, there has been a proliferation of Excel spreadsheets and other end-user developed applications (EUDAs) to plug the gap. These manual controls introduce different risks to the process, ranging from the lack of an audit trail to key-person dependencies.

Future direction

In today’s world, financial institutions need tools that can both provide an enriched reconciliation function and future-proof the organisation against a rapidly changing data landscape.

The old ways of reconciling data are clearly unable to deal with this. Traditional systems and manual workarounds are too slow, unscalable, or both. Only once these issues are addressed can firms start to leverage the vast amounts of data pumped into reconciliations to drive value-add functions such as analytics or business intelligence.

At Duco we recognise this and have spent considerable effort attacking some of the major pain points:

- Installation – on-premise systems can take months to install, while Software as a Service (SaaS) tools are essentially available on demand. For example, at Duco we are committed to getting all clients live within 24 hours.

- Data ingestion – getting data into traditional systems is often a huge headache, requiring extensive ETL or data transformation projects. This has been an industry problem for decades and is a tough nut to crack! We decided to tackle the issue from first principles, leveraging computer science research to create a data agnostic platform. This means users can simply upload their data without pre-formatting, often cutting the ingestion process from days to seconds.

- Matching rules – the traditional model is for operations staff to analyse the data, write a business requirements document, hand it over to IT for the build, wait for the build to be complete, then test. If there’s an issue, the whole process needs to start again. At Duco we’ve focused on self-service, enabling business users to write rules in English sentences using our unique “Natural Rule” language. As this process is so straightforward, iterative test cycles can be carried out on-the-fly with no development projects required.

- Scaling up – adding large data volumes or increased complexity to a traditional system can often be more trouble than it’s worth. There can be hardware concerns, integration issues and new data types to deal with. However, with SaaS and self-service tools, hardware isn’t a problem and onboarding new business lines suddenly becomes far less daunting.
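To make the matching-rules point above more concrete, here is a minimal Python sketch of rules held as plain data, so that changing a rule means editing a list entry rather than commissioning a build. It is an illustration only and is not Duco’s “Natural Rule” language; the rule format, field names and functions (`field_matches`, `failed_fields`) are hypothetical.

```python
from decimal import Decimal

# Hypothetical rule set: each rule names a field and how to compare it.
RULES = [
    {"field": "currency", "compare": "exact"},
    {"field": "amount", "compare": "tolerance", "tolerance": Decimal("0.01")},
]


def field_matches(rule, left, right):
    """Apply a single rule to one pair of records."""
    a, b = left[rule["field"]], right[rule["field"]]
    if rule["compare"] == "exact":
        return a == b
    if rule["compare"] == "tolerance":
        # Decimal avoids binary floating-point surprises with money amounts.
        return abs(Decimal(a) - Decimal(b)) <= rule["tolerance"]
    raise ValueError(f"unknown comparison: {rule['compare']}")


def failed_fields(rules, left, right):
    """List the fields on which the two records disagree."""
    return [r["field"] for r in rules if not field_matches(r, left, right)]


ledger = {"currency": "EUR", "amount": "100.00"}
statement_close = {"currency": "EUR", "amount": "100.004"}
statement_off = {"currency": "EUR", "amount": "100.50"}

within_tolerance = failed_fields(RULES, ledger, statement_close)
outside_tolerance = failed_fields(RULES, ledger, statement_off)
print(within_tolerance, outside_tolerance)
```

Because the rules are data rather than code, a test cycle amounts to editing `RULES` and re-running the comparison.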

The upshot is that firms can now set up reconciliations more quickly, react to change in an agile manner and remove dependencies on manual work. It then becomes easier to work with data and derive more value from it.

What is clear is that the amount of data firms need to handle will continue to rise. Real competitive advantages will depend on a firm’s ability to handle and use that data, rather than being swamped in a mire of conflicting legacy systems. It is time for the new wave of agile reconciliation technology to earn its place in the sun.