Agile, not fragile: how to automate the business without breaking your control framework

By Justin Hingorani, Head of Platform

Imagine a world where you don’t have to choose between agility and governance. One where your operations are fast, lean and protected by all the correct checks and balances.

This isn’t the case for many financial firms today. Governance frameworks are supposed to protect your business, but they’re often defined and confined by other factors. These include the need for rapid time-to-value, constrained IT budgets and the limits of legacy technology.

IT is in control of automation – a key feature of agile operations – for practical reasons as well as concerns about governance.

Innovations in the no-code technology space have raised interesting possibilities for firms. No-code platforms give end-users the ability to embed their knowledge directly into automation. The result is that operations teams can be much more agile.

Some firms wonder how they can ensure strong governance without IT serving as the gatekeeper of change. However, when adopted alongside best practice, no-code data automation actually strengthens governance controls rather than undermining them.

Let’s take a look at why.

Governance is out of control

It’s 2023 and yet financial services firms still have a spreadsheet problem. Manual effort remains too high, even though data volumes and complexity are surging.

The challenge is that the domain knowledge for a particular process that needs automating sits with the business users. But they can’t build the processes themselves because the systems they are using are far too technical. Users therefore need to work with IT and other divisions, meaning even minor changes can turn into days or possibly weeks of work.

This, theoretically at least, ensures that any automation is rigorously tested and approved and gives visibility across what the business is doing.

However, there are plenty of instances where business users simply can’t wait that long. This could be because you need to introduce controls quickly, such as in the event of an audit.

Often, it’s because data arrives in a format that is incompatible with legacy systems, or is particularly low quality. The amount of extract-transform-load (ETL) work required to get this data into your current systems could be prohibitive.

It doesn’t have to be a new requirement, either. Making changes to existing automations is just as complex.

Even following the specifications process doesn’t mean the business user won’t still end up with a spreadsheet. There can be elements to the data that aren’t immediately obvious. For instance, new data quality criteria that apply to a subset of the data can arise after the requirements document has been written. Automations can be built to specification and tested but still be unfit for purpose.

And then of course there are the fringe use cases where the volume doesn’t justify the expense of an IT project. Or there could be no budget for the new process.

When the choice is between observing the proper governance and meeting the immediate needs of the business, the latter usually wins. This is the reality most firms are stuck with. But they needn’t be.


Empowering business users to automate their domains

Firms are wary of end-user computing, which is hardly surprising given the truly disastrous results of some spreadsheets. For example, one of our customers’ pre-existing spreadsheet reconciliations contained an error that was costing them $3 million annually.

The world of financial services is full of multi-million or even multi-billion dollar losses caused by spreadsheet errors or lack of proper oversight.

Many firms seem to have accepted some risk of errors as a necessary evil in order to get things done, yet they often underestimate that risk. It can be avoided. The advent of no-code tools has made it possible for business users to achieve ever more sophisticated automations, much faster, with appropriate governance and controls to mitigate risks.

No-code tools do away with the need for business requirements documents and handover processes with IT. Tools like Duco, which harness natural language processing, allow business users to create complex data transformations and reconciliations in hours. This allows for rapid time-to-value.

Some are understandably wary of such a concept, given that a user with a spreadsheet could cause millions or billions of dollars’ worth of damage. What could full-scale end-user automation do? How do you give business users the tools to embed their domain expertise into automation while also ensuring the correct checks and balances are in place?

No-code data management technology is actually the answer, not the problem.

No: code, Yes: control

While opaque spreadsheets are the enemy of strong governance, no-code automation can be its ally. It’s a vital part of creating trust in your data. This has a strategic benefit on top of its usefulness for compliance. In fact, the top frustration for many people I speak to in the industry is the inability to trust data.

A key goal of any data system is to provide users with access to trustworthy datasets. To ensure this, the data must go through rigorous checks, balances and enrichment to guarantee its integrity and appropriateness for a user’s specific needs.

The following are four key ways that no-code tools can aid and strengthen your governance efforts.

Transparency

The whole idea behind no-code tools is that you don’t have to have any technical knowledge to use them. Processes are self-documenting. It’s therefore easy to decipher the logic of the transformations your team is building. This makes everything from four-eye controls to auditing much simpler.

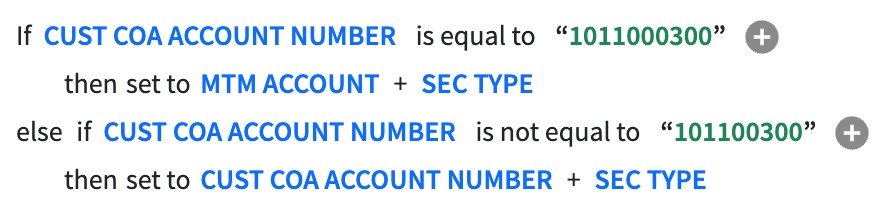

Here’s an example of an actual data transformation rule written in our proprietary Natural Rule Language (NRL). As you can see, the structure of NRL means the meaning of the rule is clear and essentially self-documenting:

The lack of control in Excel can result in a user expressing the same rule in a formula that obscures its meaning:

The difference is clear: with no-code, there’s no need to de-code.

No-code tools enable you to consolidate and standardise

Users don’t need to resort to spreadsheets when they can use no-code tools to create even complex data transformations. These tasks can all be automated on the same platform. All your processes are consolidated in one place, not spread across tens or hundreds of manual workarounds.

This allows you to gain a holistic view of your data and its journey through your organisation. It also enables process standardisation.

Of course, to ensure you get the full benefit of this there are other features you should look for in a no-code platform.

These include flexible ingestion capabilities that streamline and consolidate inputs and allow them to be modified without a rebuild; metadata capture to feed visualisation of data lineage; and API connectivity for downstream reporting, for those all-important insights.

And this all needs to be backed up by a strong matching engine to ensure you aren’t sacrificing match quality for agility.

Context

One of the key factors in determining data appropriateness is its context. For example, the contextual information surrounding a credit card transaction, such as the who, what, how, why and when, must be appropriate if it is to be used to identify suspicious or unusual activity for fraud prevention.

This could include the payment card used, the location and time of payment, and other relevant transactional information.

However, the context of data can be difficult for users to understand, especially when it’s spread across multiple systems or hidden in a spreadsheet. As explained, transparency of data’s journey (its lineage) and the use of standards help build contextual understanding.

Empowering business users who have a deep understanding of the data also provides the opportunity to enrich its meaning by associating it with related data, possibly from different systems, as in the credit card example.

Only then can context be fully harnessed, so that users can trust the data they are using to build knowledge and insights and make informed decisions about it.

Scalable governance

Writing automation for business departments is hugely labour intensive. An individual in IT can service maybe a handful of business users, creating the automation that they need.

End-user automation shifts the governance burden on IT departments from overseeing development and implementation to operational management. IT teams are no longer required to operate the technology and road test every automation. Rigorous development and testing standards should still be followed (see the next section), but users are able to do this themselves.

Instead, IT’s priority is to maintain what we call ‘operational governance’. This means ensuring that all the correct checks and balances are in place as individuals go through their automation journey.

That’s a much more scalable role for technology divisions. This kind of governance can scale across tens, hundreds or even thousands of users.

Governance best practices for no-code automation tools

No-code technology has some notable benefits for governance all by itself. But it’s when you combine this functionality with best practices that you unlock major improvements to your control framework.

Firstly, users need access to separate testing and development environments. This means that they can build their transformations in controlled and safe spaces. Processes are only promoted to production once they have been tested and four-eye checked.
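
To make the promotion rule above concrete, here is a minimal sketch in Python. It is an illustration only, not how Duco or any particular platform implements it: the `Process` class, its fields and the `approve` and `promote` functions are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Process:
    """A hypothetical no-code process moving between environments."""
    name: str
    author: str
    environment: str = "development"
    tests_passed: bool = False
    approvals: set[str] = field(default_factory=set)


def approve(process: Process, reviewer: str) -> None:
    """Record a review. Authors cannot approve their own work."""
    if reviewer == process.author:
        raise PermissionError("four-eye check: reviewer must differ from author")
    process.approvals.add(reviewer)


def promote(process: Process) -> None:
    """Move a process to production only if it is tested and reviewed."""
    if not process.tests_passed:
        raise ValueError("process has not passed testing")
    if not process.approvals:
        raise ValueError("process has not been four-eye checked")
    process.environment = "production"
```

The point of the sketch is that the gate lives in the platform, not in a policy document: a process that is untested, or reviewed only by its own author, simply cannot reach production.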

You should also have clearly defined roles and responsibilities that are baked into the platform itself. You want a solution that enables you to set permissions at a granular level. This means you can control which teams and users can access certain data and what actions they can perform.
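
As a rough sketch of what granular permissions can look like, the Python below checks both the action and the dataset before allowing anything. The role names, users, datasets and the `is_allowed` function are assumptions made up for illustration; a real platform will have its own permission model.

```python
# Hypothetical roles and the actions each role may perform.
ROLE_ACTIONS = {
    "builder": {"view", "edit_rules", "run_tests"},
    "reviewer": {"view", "approve"},
    "auditor": {"view"},
}

# Hypothetical grants: each user has one role and a set of datasets.
USER_GRANTS = {
    "alice": ("builder", {"fx_trades"}),
    "bob": ("reviewer", {"fx_trades", "cash_positions"}),
    "carol": ("auditor", {"fx_trades", "cash_positions"}),
}


def is_allowed(user: str, action: str, dataset: str) -> bool:
    """Allow an action only if the user's role permits it on that dataset."""
    grant = USER_GRANTS.get(user)
    if grant is None:
        return False  # unknown users get no access by default
    role, datasets = grant
    return dataset in datasets and action in ROLE_ACTIONS.get(role, set())
```

Here a builder can edit rules on the data they own but cannot approve their own changes, and an auditor can see everything in scope without being able to alter it.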

It’s important that your solution tracks and produces an audit trail of all the changes too. This means you can clearly show what has happened to your data while it’s been on the platform.
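
One simple way to picture such an audit trail is an append-only log in which each entry carries a hash of the one before it, so later tampering becomes detectable. The Python sketch below assumes that design purely for illustration; the `AuditTrail` class and its methods are hypothetical and are not taken from any specific product.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditTrail:
    """A hypothetical append-only log of changes, chained by hash."""

    def __init__(self) -> None:
        self._entries: list[dict] = []

    @staticmethod
    def _digest(entry: dict) -> str:
        # Hash every field except the stored hash itself.
        body = {k: v for k, v in entry.items() if k != "hash"}
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def record(self, user: str, action: str, detail: dict) -> dict:
        """Append who did what, when, linked to the previous entry."""
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "action": action,
            "detail": detail,
            "previous_hash": self._entries[-1]["hash"] if self._entries else "",
        }
        entry["hash"] = self._digest(entry)
        self._entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Return True if no entry has been altered or reordered."""
        previous_hash = ""
        for entry in self._entries:
            if entry["previous_hash"] != previous_hash:
                return False
            if entry["hash"] != self._digest(entry):
                return False
            previous_hash = entry["hash"]
        return True

    def history(self) -> list[dict]:
        """Return shallow copies of the entries, oldest first."""
        return [dict(entry) for entry in self._entries]
```

An auditor can then read `history()` to see every change in order, and run `verify()` to confirm the record itself has not been edited after the fact.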

Finally, the system should be self-documenting. This means it is designed in a way that removes the need for external reference materials and enables use of the system with no preexisting knowledge.

Natural language rules do this by using plain English sentences to explain what’s happening. It’s by being self-documenting that a system becomes easily accessible to both users and external parties, such as auditors.
